Spark supports the following compression options for the ORC data source.

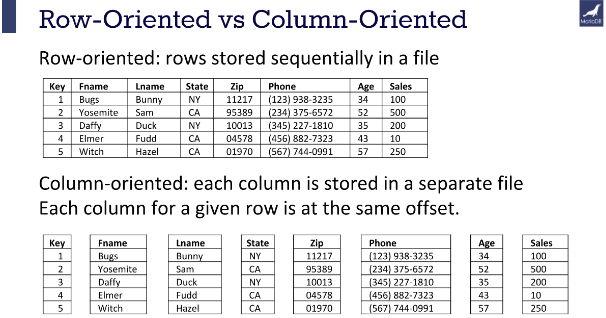

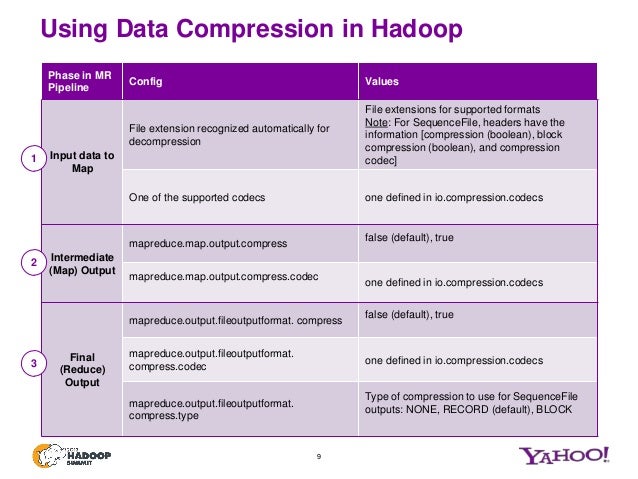
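As a minimal sketch of choosing one of these codecs when writing ORC from PySpark, the snippet below passes the `compression` option to the DataFrame writer. The helper names `normalize_orc_codec` and `write_orc` are hypothetical. The codec list is an assumption based on recent Spark documentation (`none`, `snappy`, `zlib`, `lzo`, `zstd`, `lz4`). `zstd` and `lz4` only exist in newer Spark releases, so check the list against your version.

```python
# Codec short names assumed to be accepted by Spark's ORC writer
# (zstd and lz4 are only available in newer Spark releases).
ORC_CODECS = ("none", "snappy", "zlib", "lzo", "zstd", "lz4")


def normalize_orc_codec(name: str) -> str:
    """Hypothetical helper: return the lower-cased codec name, or raise if unknown."""
    codec = name.strip().lower()
    if codec not in ORC_CODECS:
        raise ValueError(f"unsupported ORC codec {name!r}; expected one of {ORC_CODECS}")
    return codec


def write_orc(df, path: str, codec: str = "snappy") -> None:
    """Hypothetical helper: write a PySpark DataFrame as ORC with the given codec.

    `df` is expected to be a pyspark.sql.DataFrame. The per-write
    "compression" option takes precedence over the session-wide
    spark.sql.orc.compression.codec setting.
    """
    (df.write
       .mode("overwrite")
       .option("compression", normalize_orc_codec(codec))
       .orc(path))
```

With an active `SparkSession`, a call such as `write_orc(spark.range(1000), "/tmp/example_orc", codec="zlib")` would write zlib-compressed ORC files. To change the default for every ORC write in a session instead, you can set `spark.conf.set("spark.sql.orc.compression.codec", "zlib")`.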

What is the ORC file?

ORC stands for Optimized Row Columnar. It provides a highly efficient way to store data in a self-describing, type-aware, column-oriented format for the Hadoop ecosystem, similar to other columnar storage formats that Hadoop supports, such as RCFile and Parquet. ORC is heavily used as a storage format for Apache Hive because its efficient layout enables high-speed processing. It is also natively supported by many other frameworks, including Hadoop MapReduce, Apache Spark, Pig, NiFi, and more.

- Fast reads: ORC supports high-speed processing because it creates a built-in index by default and stores lightweight statistics, such as min/max values for numeric columns. These let readers skip data that cannot match a query.
- Reduces I/O: ORC reads only the columns referenced in a query, which reduces I/O.
- Compression: because ORC stores data by column and in compressed form, it typically takes far less disk space than row-oriented formats.

Choosing a splittable compression format for Hadoop input

As a data scientist or software engineer working with Hadoop, you may have come across the need to compress large files that are input to your Hadoop cluster. Compressing these files can help reduce storage and processing costs, as well as improve performance. However, not all compression formats are created equal, and some are better suited to the Hadoop environment than others.

One compression format that is commonly used for Hadoop input is Bz2. Bz2 offers a high compression ratio, and it is splittable. Hadoop's BZip2Codec can start decompressing at block boundaries, so a single large Bz2 file can be divided into smaller chunks and processed in parallel. Its main drawback is speed: Bz2 is slow to compress and decompress.

So, if you are starting with Bz2 files, what is the best splittable compression format for Hadoop input? In this article, we will explore some of the top options and discuss their pros and cons.

Gzip compression format

Gzip is a popular compression format that is widely used in the Linux and Unix world. It compresses and decompresses faster than Bz2 and achieves a reasonable compression ratio. It does not compress as well as Bz2, however, so if storage space is the main concern, Gzip may not be the best option. Its bigger limitation for Hadoop input is that it is not splittable. A Gzip stream must be decompressed from the beginning, so Hadoop cannot split a large Gzip file into chunks and must process the whole file in a single task.

Snappy compression format

Snappy is a compression format developed by Google that is widely used in Hadoop. It is designed to compress and decompress very quickly and handles the repeated patterns common in text files reasonably well. The cost is a lower compression ratio: it does not compress as well as Gzip or Bz2, so if storage space is a concern, Snappy may not be the best option. A plain Snappy-compressed file is not splittable. Snappy becomes splittable when used inside a container format that compresses data block by block, such as ORC, Parquet, Avro, or SequenceFile. Before adopting it, check that every tool and system that reads your data supports Snappy.

LZO compression format

LZO is a compression format designed for high-speed compression and decompression, which makes it well-suited for use with Hadoop. Like Snappy, it works well on text files with many repeated patterns. Its compression ratio is lower than that of Gzip or Bz2, however, so if storage space is a concern, LZO may not be the best option. LZO files become splittable only after they are indexed, for example with the indexer that ships with the hadoop-lzo library. LZO is also less widely used than other formats. Because of its GPL license it is not bundled with Hadoop, so you may run into compatibility issues with certain tools or systems.

In conclusion, if you are starting with Bz2 files and looking for the best splittable compression format for Hadoop input, there are several options to consider. Gzip, Snappy, and LZO each have their own pros and cons:

- Gzip compresses well but is not splittable.
- Snappy is very fast and splittable inside container formats such as ORC and Parquet.
- LZO is fast and splittable once indexed.

Ultimately, the best option for your specific use case will depend on a variety of factors. These include the size and type of data you are working with, the level of compression you require, and the compatibility of the compression format with your existing tools and systems. We hope this article has given you a useful overview of some of the top splittable compression formats for Hadoop input and has helped you decide which format is right for your needs.
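To check the ratio and speed trade-offs on your own data, the rough sketch below uses only Python's standard library. It compresses a sample with Gzip and Bz2 and times each step. Snappy and LZO are omitted because they are not in the standard library and need third-party bindings. The synthetic log-like sample and the helper names `sample_text` and `compare_codecs` are illustrative assumptions, and your numbers will vary with your data and hardware.

```python
import bz2
import gzip
import random
import time


def sample_text(n_lines: int = 20_000, seed: int = 0) -> bytes:
    """Build deterministic, log-like text so runs are repeatable."""
    rng = random.Random(seed)
    words = ["INFO", "WARN", "ERROR", "user", "request", "hadoop", "block", "read", "write", "ms"]
    lines = (
        f"{i} {' '.join(rng.choice(words) for _ in range(8))} {rng.randint(0, 999)}"
        for i in range(n_lines)
    )
    return "\n".join(lines).encode("utf-8")


def compare_codecs(data: bytes) -> dict:
    """Compress `data` with gzip and bz2; return size, ratio and timings per codec."""
    codecs = {
        "gzip": (gzip.compress, gzip.decompress),
        "bz2": (bz2.compress, bz2.decompress),
    }
    results = {}
    for name, (compress, decompress) in codecs.items():
        t0 = time.perf_counter()
        packed = compress(data)
        t1 = time.perf_counter()
        unpacked = decompress(packed)
        t2 = time.perf_counter()
        if unpacked != data:  # sanity check: round trip must be lossless
            raise RuntimeError(f"{name} round trip failed")
        results[name] = {
            "size": len(packed),
            "ratio": len(data) / len(packed),
            "compress_s": t1 - t0,
            "decompress_s": t2 - t1,
        }
    return results


if __name__ == "__main__":
    data = sample_text()
    print(f"original {len(data)} bytes")
    for name, r in compare_codecs(data).items():
        print(f"{name:5} {r['size']:>9} bytes  ratio {r['ratio']:.1f}x  "
              f"compress {r['compress_s'] * 1000:.1f} ms  "
              f"decompress {r['decompress_s'] * 1000:.1f} ms")
```

On repetitive text like this, Bz2 usually produces the smaller output while Gzip decompresses faster. Measuring with a sample of your real input is the safest way to decide.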
